June 18, 2018

By: Michael Feldman

Sandia National Laboratories will soon be taking delivery of the world’s most powerful supercomputer using ARM processors. The system, known as Astra, is being built by Hewlett Packard Enterprise (HPE) and will deliver 2.3 petaflops of peak performance when it’s installed later this year.

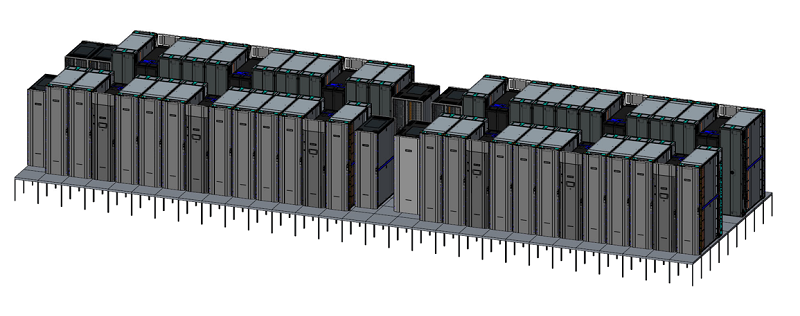

Astra rendering. Source: HPE

“Sandia National Laboratories has been an active partner in leveraging our Arm-based platform since its early design, and featuring it in the deployment of the world’s largest Arm-based supercomputer, is a historical moment not just for us, but for the industry as we race toward achieving exascale computing,” said Mike Vildibill, vice president, Advanced Technology Group, HPE.

Astra will be based on HPE’s Apollo 70 system and will comprise 2,592 dual-socket nodes containing more than 145,000 cores – by far the largest such system the company has delivered. If it were up and running today, it would easily make it into the upper fifth of the TOP500 list.

Each node will be equipped with two 28-core Cavium ThunderX2 processors running at 2.0 GHz. These aren’t the biggest or the fastest of Cavium’s newest ARM processors, but they represent something of a sweet spot in price-performance. In aggregate, the compute nodes will draw 1.2 MW of power, which translates into a respectable energy efficiency for a 2.3-petaflop machine.
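
For readers who want to check the arithmetic, here is a rough back-of-the-envelope sketch of how the quoted figures fit together. The node count, socket count, core count, clock speed, and power draw come from the article; the figure of 8 double-precision floating point operations per core per cycle is an assumption (two 128-bit SIMD units with fused multiply-add), as are all the function and variable names.

```python
# Figures quoted in the article
NODES = 2592
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 28
CLOCK_HZ = 2.0e9            # 2.0 GHz
COMPUTE_POWER_W = 1.2e6     # 1.2 MW, compute nodes only

# ASSUMPTION (not stated in the article): each core retires
# 8 double-precision flops per cycle.
FLOPS_PER_CYCLE = 8


def total_cores(nodes, sockets_per_node, cores_per_socket):
    """Total core count across all compute nodes."""
    return nodes * sockets_per_node * cores_per_socket


def peak_flops(cores, clock_hz, flops_per_cycle):
    """Theoretical peak: cores x clock x flops per cycle."""
    return cores * clock_hz * flops_per_cycle


cores = total_cores(NODES, SOCKETS_PER_NODE, CORES_PER_SOCKET)
peak = peak_flops(cores, CLOCK_HZ, FLOPS_PER_CYCLE)
gflops_per_watt = peak / COMPUTE_POWER_W / 1e9

print(f"cores: {cores:,}")
print(f"peak: {peak / 1e15:.2f} petaflops")
print(f"peak efficiency: {gflops_per_watt:.2f} gigaflops per watt")
```

Under that assumption, the result lands close to the 2.3 petaflops cited, and the efficiency works out to a little under 2 gigaflops per watt of peak performance. Note that this counts only compute-node power and theoretical peak, so it is not comparable to a measured Linpack efficiency figure.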

Local storage will be supplied by Apollo A4520 enclosures, providing 350 TB in the form of an all-flash Lustre appliance. Because of the relatively small capacity and high performance, it will primarily be used for operations needing extreme I/O bandwidth – things like burst buffering and file checkpointing.

Prior to the Astra announcement, most of the other action with regard to ARM-powered HPC was taking place in the United Kingdom. HPE had previously announced that three UK universities (Edinburgh, Leicester, and Bristol) had ordered Apollo 70 clusters, but each of these systems will be outfitted with just 64 nodes and will top out at a mere 74 teraflops. As far as computational capacity goes, the closest thing to Astra is Isambard, a 10,000-core Cray XC50 supercomputer using these same ThunderX2 processors. It’s set to be deployed at the Great Western 4 (GW4) Alliance, a research consortium of four UK universities (Bristol, Bath, Cardiff and Exeter).

Astra’s delivery is the first production deployment of the Department of Energy’s (DOE) National Nuclear Security Administration’s (NNSA) Vanguard Project. The project’s mission is to ensure a viable HPC ecosystem is established for ARM technology within the NNSA and the larger DOE community. Besides Sandia, a number of other national labs are involved in the project, including Lawrence Livermore, Oak Ridge, Argonne, and Los Alamos.

Over the next few years, these labs will help fill out the system software stack and perform application porting for various multi-physics codes, with the eventual goal of supporting ARM-based exascale systems at the agency. A number of ThunderX2-powered prototype clusters, based on pre-production Cavium silicon, are already running at the labs and are being used to develop operating system (OS) support, compilers, math libraries, file systems, and other elements of the toolchain. Lawrence Livermore, for example, has already ported the NNSA’s Tri-Lab Operating System Stack (TOSS) to the ThunderX2 platform.

Given the size of Astra, it will be the first ARM system in the world that will be able to run HPC workloads at true supercomputing scale and demonstrate how much computational capacity can be extracted from the hardware. “One of the important questions Astra will help us answer is how well does the peak performance of this architecture translate into real performance for our mission applications,” said Mark Anderson, program director for NNSA’s Advanced Simulation and Computing program, which funds Astra.

Thanks to the local flash storage and the eight memory channels on each ThunderX2 socket, Astra is likely to be especially adept at analytics and other data-demanding codes. In particular, the eight-channel memory design represents a 33 percent improvement on Intel’s six-channel implementation of its Xeon Scalable processor. The better bandwidth is one of ThunderX2’s most important differentiating features and represents an attempt to provide a more balanced relationship between compute capacity and memory speed. (Note that you don’t need an ARM processor to do this; AMD has the same eight-memory-channel design with its x86 EPYC processor.)
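
The 33 percent figure follows directly from the channel counts. The sketch below works it through and, purely for illustration, converts channels into theoretical per-socket bandwidth. The DDR4-2666 memory speed and 8-byte channel width are assumptions not stated in the article, and the names are hypothetical.

```python
# Channel counts quoted in the article
THUNDERX2_CHANNELS = 8
XEON_SCALABLE_CHANNELS = 6

# ASSUMPTIONS (not from the article): both platforms run DDR4-2666,
# and each channel moves 8 bytes (64 bits) per transfer.
TRANSFERS_PER_SEC = 2666e6
BYTES_PER_TRANSFER = 8


def peak_bandwidth_gbs(channels,
                       transfers_per_sec=TRANSFERS_PER_SEC,
                       bytes_per_transfer=BYTES_PER_TRANSFER):
    """Theoretical per-socket memory bandwidth in GB/s."""
    return channels * transfers_per_sec * bytes_per_transfer / 1e9


def relative_gain(new, old):
    """Fractional improvement of new over old."""
    return (new - old) / old


channel_gain = relative_gain(THUNDERX2_CHANNELS, XEON_SCALABLE_CHANNELS)
bw_thunderx2 = peak_bandwidth_gbs(THUNDERX2_CHANNELS)
bw_xeon = peak_bandwidth_gbs(XEON_SCALABLE_CHANNELS)

print(f"channel advantage: {channel_gain:.0%}")
print(f"8 channels: {bw_thunderx2:.1f} GB/s per socket")
print(f"6 channels: {bw_xeon:.1f} GB/s per socket")
```

As long as both sides use the same memory speed, the bandwidth ratio equals the channel ratio, so the one-third advantage holds regardless of the DIMM speed actually chosen.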

This focus on optimizing data movement reflects the fact that moving data is where most of a system’s energy is consumed these days. “We can see clearly that the amount of power required to move data inside the system is an order of magnitude greater than the amount of power needed to compute that data,” explained Vildibill.

That said, the DOE’s main interest in ARM probably has more to do with the fact that it represents a third viable processor architecture for the datacenter and is poised to get much broader industry support. Maybe just as important though, the architecture is driven by an open licensing model that encourages innovation and diversity. And that model has already resulted in a partnership between ARM Ltd and Fujitsu to establish an HPC implementation of ARM, known as the ARMv8-A Scalable Vector Extension (SVE). It’s set to debut in the Post-K supercomputer, Japan’s initial exascale system that is scheduled to be installed at RIKEN in 2021-2022. Future features, such as on-package high bandwidth memory and integrated high-performance interconnects, are already being anticipated.

Astra is scheduled for deployment in late summer. It will be installed at Sandia in a part of a datacenter that originally housed the Red Storm supercomputer.