Sept. 6, 2018

By: Michael Feldman

According to the latest analysis from Hyperion Research, the various global efforts to reach exascale supercomputing are making good headway. But in some cases, the decision to develop domestically produced processors for these systems and the inclusion of new application use cases appear to be stretching out the timelines.

The analysis was presented by Hyperion analyst Bob Sorensen during the company’s latest HPC User Forum event, which was held in Detroit earlier this week. In the presentation, now posted on YouTube, Sorensen provided an update on the exascale development work taking place across the four major supercomputing geographies: the US, China, Japan, and Europe.

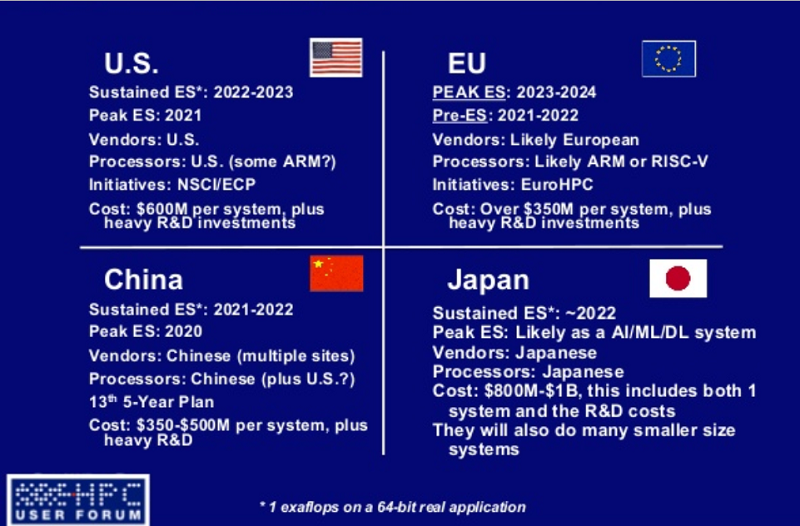

According to Sorensen, Hyperion is still projecting that China will produce a “peak” exaflop supercomputer by 2020, with the US following in 2021. None of the efforts is expected to produce a sustained exascale machine until 2021, sustained exascale being defined as one exaflop of 64-bit performance on a real application. The accompanying slide breaks down the various efforts with regard to projected system deployment dates, processor technologies, system suppliers, and approximate cost per machine.

Source: Hyperion Research

One thing Sorensen pointed out was that the deployment dates for the European, Japanese, and Chinese systems appeared to be moving out because all three are engaged in significant R&D efforts to build customized HPC processors for their respective systems.

In particular, the deployment dates for the EU machines have pushed out a year or two – to 2023 and 2024 – due to a relatively recent decision to develop domestically produced chips for their first batch of exascale systems. The processor development is being accomplished under the European Processor Initiative (EPI), which, as we reported in July, has just gotten underway. As we noted in that report, the EPI work is expected to result in a general-purpose processor based on the Arm architecture, as well as an accelerator using a RISC-V implementation.

The Chinese exascale effort is focused around three projects, led by the National University of Defense Technology (NUDT), Sunway, and Sugon, respectively. Like the EU systems, these machines will be based on processors designed and manufactured indigenously, most likely based on customized implementations of Arm, x86, and ShenWei architectures. “But we’re starting to see that those programs are slipping,” said Sorensen. “And the early peak exascale ambitions of 2020, I suspect, aren’t going to happen.”

Sorensen speculates that at least part of this delay is related to the new use cases these new Chinese architectures will have to address, namely, the AI/machine learning/deep learning application troika as well as high performance data analytics. As a result, he thinks that it may be 2021 or perhaps even 2022 before China’s first peak exascale system comes online, representing a one- or two-year delay relative to the original schedule.

Japan, meanwhile, is currently on track to put its first exascale supercomputer into production in 2022. That system, known as Post-K, was originally slated to be up and running in 2020, but in 2016, the Japanese had already conceded that they were probably one or two years behind schedule. Sorensen now thinks the 2022 date is rather conservative and they may, in fact, be able to boot up the machine somewhat earlier. Some of that optimism may be related to the fact that the development of the A64FX chip tasked to power the Post-K system is pretty far along, as demonstrated at the recent Hot Chips conference in August.

Ironically, after a somewhat rocky start with questionable government funding, the US exascale plans now look to be among the most stable. The country’s first peak exascale supercomputer, the A21, is set to deploy at Argonne National Lab in 2021, with additional machines providing sustained exascale performance slated to be installed at other Department of Energy labs in the 2022-2023 timeframe.

Some of this stability can be attributed to the fact that the US has established processor vendors (Intel, NVIDIA, AMD, and IBM) with a long history of high-end chip development, not to mention a choice of multiple system vendors (Cray, IBM, HPE, and Dell) that are able to integrate these chips into cutting-edge HPC machinery. And at this point, the US is already nearly a fifth of the way to exascale with the newly christened Summit supercomputer at Oak Ridge National Lab.

Approximately 92 percent of Summit’s floating point performance is derived from its V100 GPU accelerators, which has already resulted in the world’s first exascale application, albeit at sub-64-bit precision. The Summit application in question is a comparative genomics code that used the innate ability of the GPUs to perform machine learning computations at well above the chip's peak 64-bit FP rating. As Sorensen noted, the suitability of GPUs to greatly accelerate these kinds of lower precision workloads is serendipitously expanding the HPC application landscape and changing how these machines will be used.

“The exascale systems that we are looking at today for 2021 and 2022 may be doing a whole bunch of different classes of applications in a way that we have never really seen before,” explained Sorensen. “We can’t predict what kinds of applications are really going to be driving these systems as they look at sustained performance.”